Table of contents

Background

CETAS is very similar to SQL CREATE TABLE TableName command. But, the table is created in Azure Data Lake and is called an External Table. External here means it’s not inside the Synapse SQL data warehouse (Fancy name: Dedicated SQL Pool).

If you have Synapse, why not save the data in Synapse SQL warehouse?

Because Synapse SQL warehouse storage is expensive and not meant for everyone to access.

Give me some real examples

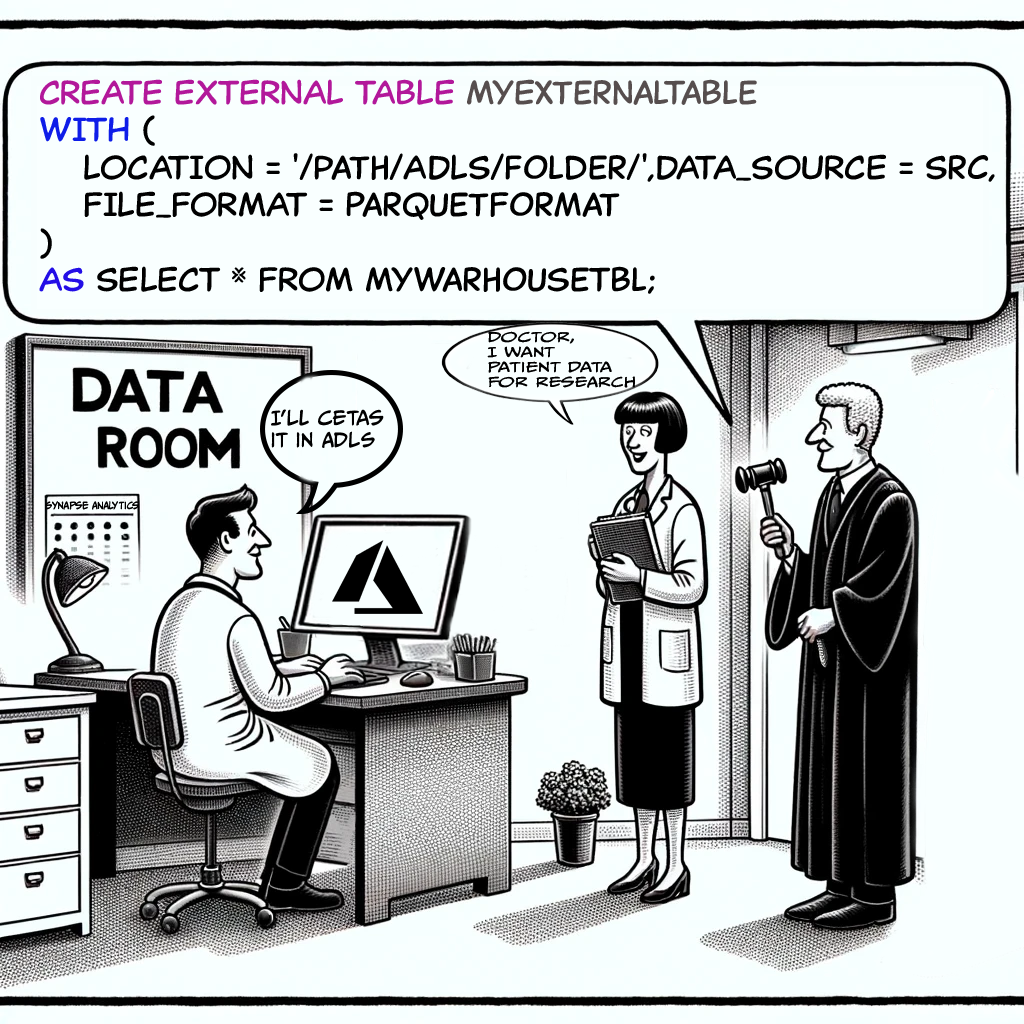

Removing Patient’s Identity and Sharing Their Data as ADLS Tables

A hospital wants to share patient data stored in its Synapse SQL warehouse with researchers. Will they let the entire world access their Synapse warehouse? No way, that would land them in jail. Patient data can’t be shared directly. So, they create CETAS in Azure Data Lake with data from their SQL warehouse.

Benefits: Avoids jail time and helps the research world make new medicines.

Inlet wants to store chip Sensor data and Share as Tables for university students

Inlet company collects a huge amount of sensor data from chips in ADLS. They have an external university research team that wants to analyze it. The in-house team connects to Synapse using PySpark to clean the data. Then, using Synapse SQL (serverless), they create neat, report-ready CETAS tables in ADLS. The external university is given access to these tables only, keeping Inlet’s internal Synapse warehouse out of the entire process.

Hence, CETAS is an important command for creating external tables and storing the data from your SQL query permanently as tables in ADLS.

CETAS Scenarios

Pull Data from Synapse Warehouse and put It in ADLS CETAS Tables

Suppose you are a data engineer with access to both the Azure Data Lake Gen2 account and the Synapse workspace. The patient records are present in the SQL warehouse, and you want to export this data into ADLS as a table.

Get the access sorted

-

Enable Managed Identity for Synapse Workspace:

Here, we will use Managed Identity. Why? Because it’s the simplest, and Azure handles everything for us. But there are other options for access too, like the Service Principal method and the SAS method.

- Go to your Synapse workspace in the Azure portal.

- Under the “Identity” section, ensure the “System-assigned managed identity” is enabled.

-

Grant Access to Managed Identity on ADLS Gen2:

- Go to your ADLS Gen2 account in the Azure portal.

- Navigate to the “Access Control (IAM)” section.

- Click on “Add role assignment.”

- Assign the role “Storage Blob Data Contributor” to the managed identity of your Synapse workspace.

Custom DB to store connection info - Serverless SQL only

When using CETAS with a serverless SQL pool, don’t use the built-in database for connection info, credentials, or file formats. Instead, create a new database to keep things organized. For a dedicated SQL pool, you can use the built-in database.

-

Create Custom Database:

CREATE DATABASE MyCustDbForCETASInfo; -

Use Custom Database:

USE MyCustDbForCETASInfo;

Pull-from-Warehouse and Put-in ADLS CETAS

Next, create an external table in ADLS Gen2 using managed identity for authentication.

-

Create Database Scoped Credential:

USE MyCustDbForCETASInfo; CREATE DATABASE SCOPED CREDENTIAL MyADLSCredential WITH IDENTITY = 'Managed Identity'; -

Create External Data Source:

CREATE EXTERNAL DATA SOURCE MyADLS WITH ( TYPE = HADOOP, LOCATION = 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net', CREDENTIAL = MyADLSCredential ); -

Create External File Format:

CREATE EXTERNAL FILE FORMAT ParquetFormat WITH ( FORMAT_TYPE = PARQUET ); -

Create and fill the CETAS Table:

CREATE EXTERNAL TABLE SalesDataExternal WITH ( LOCATION = '/salesdata/', DATA_SOURCE = MyADLS, FILE_FORMAT = ParquetFormat ) AS SELECT * FROM SalesData;

Pull Data from ADLS and Pur as ADLS CETAS Tables

Like the Inlet company case study, let’s say you have lots of .parquet files as data. You want to clean this data and create tables in ADLS itself using Synapse. To do this follow these steps,

Get the access sorted

The creation of managed identity etc have been left out. As I explained them before.

Pull from ADLS & Put in ADLS as CETAS

-

Create Database Scoped Credential:

CREATE DATABASE SCOPED CREDENTIAL MyADLSCredential WITH IDENTITY = 'Managed Identity'; -

Create External Data Source:

-- Create an external data source for the Azure storage account CREATE EXTERNAL DATA SOURCE MyADLS WITH ( TYPE = HADOOP, -- For dedicated SQL pool -- TYPE = BLOB_STORAGE, -- For serverless SQL pool LOCATION = 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net', CREDENTIAL = MyADLSCredential ); -

Create External File Format:

CREATE EXTERNAL FILE FORMAT ParquetFormat WITH ( FORMAT_TYPE = PARQUET ); -

Query Data from ADLS:

SELECT * FROM OPENROWSET( BULK 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net/path/to/data/', FORMAT = 'PARQUET' ) AS [result] -

Create the CETAS Table:

CREATE EXTERNAL TABLE ProcessedDataExternal WITH ( LOCATION = '/processeddata/', DATA_SOURCE = MyADLS, FILE_FORMAT = ParquetFormat ) AS SELECT * FROM OPENROWSET( BULK 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net/path/to/data/', FORMAT = 'PARQUET' ) AS [result];

Service Principal Method and SAS Methods

We used managed identity in our examples. Apart from Managed Identity, you have a couple of other options for connecting Synapse to Azure Data Lake Storage (ADLS):

-

Service Principal Authentication: A service principal is like a special user for applications to access Azure resources. You create a service principal and give it the needed permissions on ADLS. Its like functional id.

-

Shared Access Signature (SAS): A Shared Access Signature (SAS) allows limited access to your storage account for a specific time and with specific permissions.

Here’s how you can set up each method:

Service Principal Authentication Setup

- Create a Service Principal: You can create a service principal using the Azure portal, Azure CLI, or PowerShell. Here is an example using Azure CLI:

az ad sp create-for-rbac --name <service-principal-name> --role "Storage Blob Data Contributor" --scopes /subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account> - Grant Access to the Service Principal on ADLS Gen2: Assign the necessary role to the service principal:

az role assignment create --assignee <appId> --role "Storage Blob Data Contributor" --scope /subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account> - Configure the External Data Source in Synapse: Use the service principal credentials in your SQL script:

CREATE DATABASE SCOPED CREDENTIAL MyADLSCredential WITH IDENTITY = 'service-principal-id', SECRET = 'service-principal-password'; CREATE EXTERNAL DATA SOURCE MyADLS WITH ( TYPE = HADOOP, LOCATION = 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net', CREDENTIAL = MyADLSCredential );

Shared Access Signature (SAS) Setup

- Generate a SAS Token: You can generate a SAS token through the Azure portal, Azure Storage Explorer, Azure CLI, or programmatically using Azure Storage SDKs. Here is an example using Azure CLI:

az storage account generate-sas --permissions rwdlacup --account-name <storage-account> --services b --resource-types co --expiry <expiry-date> - Configure the External Data Source in Synapse: Use the SAS token in your SQL script:

CREATE DATABASE SCOPED CREDENTIAL MyADLSSASCredential WITH IDENTITY = 'SHARED ACCESS SIGNATURE', SECRET = 'sas-token'; CREATE EXTERNAL DATA SOURCE MyADLS WITH ( TYPE = HADOOP, LOCATION = 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net', CREDENTIAL = MyADLSSASCredential );

Managed Identity is usually recommended because it’s easy to use and secure, but Service Principal and SAS can be useful in certain situations where Managed Identity might not work.

Alternative to CETAS?

1. Azure Synapse Spark Pools

Spark capability, pyspark-notebooks comes with Synapse. You can use spark pools in Synapse. This can be more efficeint than JDBC for huge volume of data handling.

from pyspark.sql import SparkSession

# Initialize Spark session

spark = SparkSession.builder \

.appName("SynapseSparkPoolExample") \

.getOrCreate()

# Read data from ADLS

df = spark.read \

.format("parquet") \

.load("abfss://myfilesystem@myadlsaccount.dfs.core.windows.net/path/to/data/")

# Process the data

processed_df = df.filter(df['SalesDate'] >= '2023-01-01')

# Write the processed data back to ADLS

processed_df.write \

.mode("overwrite") \

.format("parquet") \

.save("abfss://myfilesystem@myadlsaccount.dfs.core.windows.net/processeddata/")

# Register the Parquet files as a table in the Spark session

spark.sql("CREATE EXTERNAL TABLE IF NOT EXISTS SalesDataExternal USING parquet LOCATION 'abfss://myfilesystem@myadlsaccount.dfs.core.windows.net/processeddata/'")

2. Azure Data Factory (ADF)

ADF is one of the core component of Synapse. It’s main job is to tranfer data from here to there. This can be a preferred option to avoid lengthy coding etc. Typical steps to perform the activity would be:

- Create a pipeline: This is the workflow for the entire activity.

- Use Copy Activity: This is a very important activity to copy data and used frequently in pipelines.

3. PolyBase

PolyBase is a technology for MSSQL Server. It allows you to query external data as if it were part of the database.

In Synapse we created CETAS etc. We were already using Polybase technology.

Let’s test our knowledge

- What function is used to read the data in files stored in a data lake?

- FORMAT

- ROWSET

- OPENROWSET

Answer: OPENROWSET

-

What character in file path can be used to select all the file/folders that match rest of the path?

- &

- *

- /

Answer: *

-

Which external database object encapsulates the connection information to a file location in a data lake store?

- FILE FORMAT

- DATA SOURCE

- EXTERNAL TABLE

Answer: DATA SOURCE

Based on the given ferocity rankings for the animals, here’s how you can create an output table: