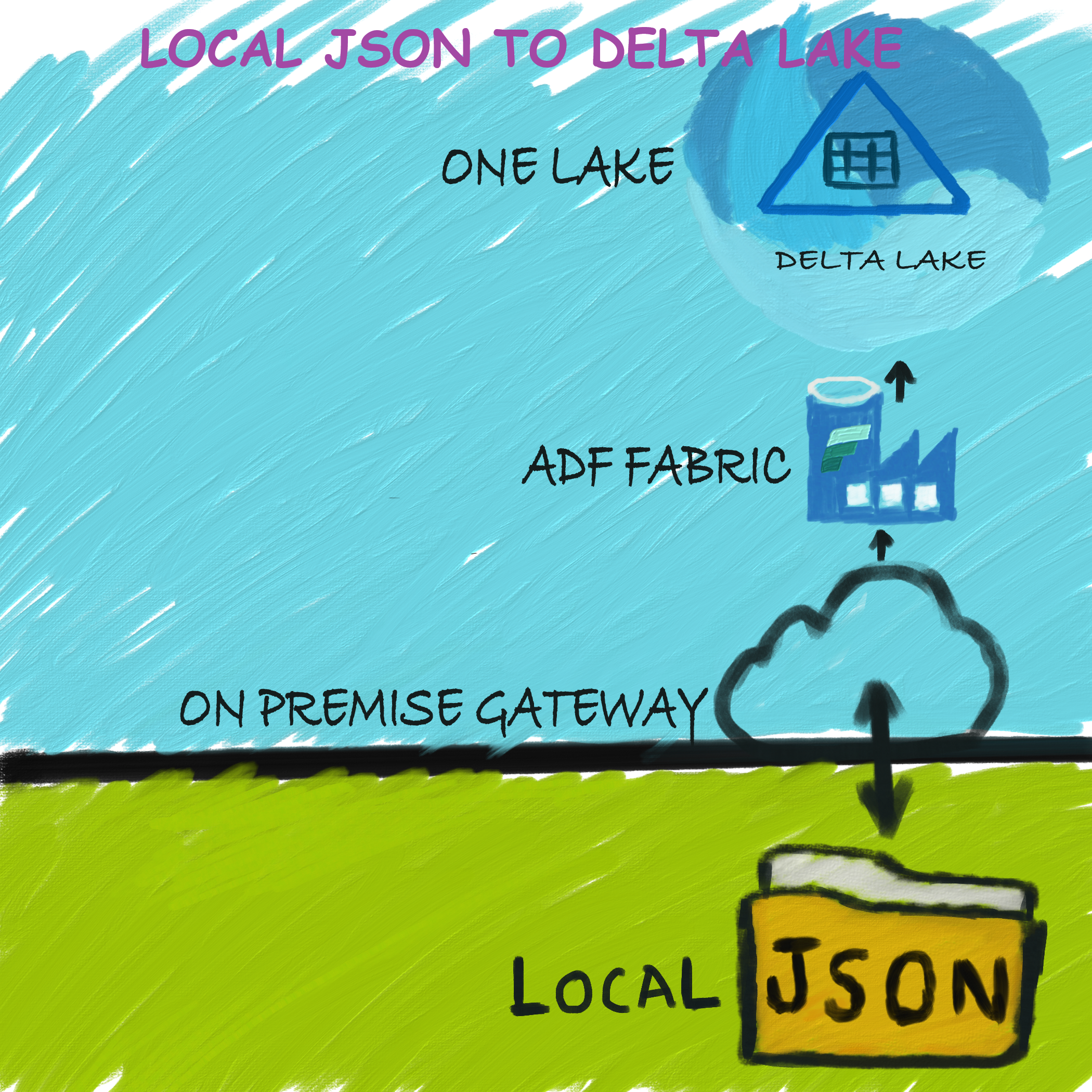

Background

Here, I’ll show you two things:

- How to connect Fabric or Azure Data Factory (ADF) to your local file system using an on-premises gateway.

- How to use a simple, no-code method to automatically copy JSON files into a Delta Lake table.

Let’s get started.

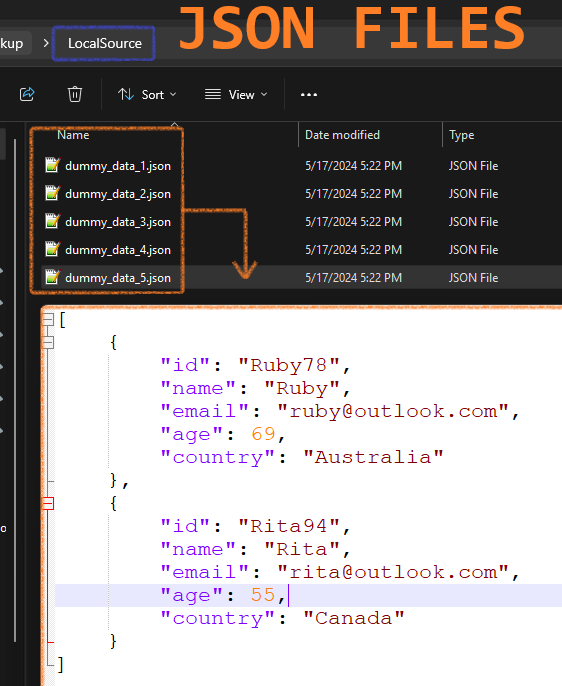

Prepare the Local Files and On-Premises Gateway

- Download the sample JSON files from here and place them in a local folder on your computer.

- Install the on-premises gateway. It’s straightforward and easy to install. You can find detailed instructions here.

Note: Make sure an admin account has full permissions on the Security tab of the local folder's properties. Granting admin access is not always practical, but it simplifies the setup and avoids permission problems later.
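Before setting up the gateway, it can help to confirm that the downloaded files parse as JSON and carry the fields the table will expect. The following is a minimal sketch, not part of the original walkthrough: the helper name check_json_folder is made up, the field names are taken from the table created later in this post, and it assumes each file holds either a single object or a list of objects.

```python
import json
from pathlib import Path

# Field names assumed from the jsonDelta table defined in this post.
EXPECTED_FIELDS = {"id", "name", "email", "age", "country"}


def check_json_folder(folder):
    """Return {file name: list of problems} for every .json file in folder."""
    report = {}
    for path in sorted(Path(folder).glob("*.json")):
        try:
            data = json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, UnicodeDecodeError) as exc:
            report[path.name] = [f"not valid JSON: {exc}"]
            continue
        # Assumption: a file holds one object or a list of objects.
        records = data if isinstance(data, list) else [data]
        problems = []
        for index, record in enumerate(records):
            if not isinstance(record, dict):
                problems.append(f"record {index} is not an object")
                continue
            missing = EXPECTED_FIELDS - set(record)
            if missing:
                problems.append(f"record {index} is missing {sorted(missing)}")
        report[path.name] = problems
    return report
```

Call check_json_folder with the path of your local folder; an empty list next to a file name means no problems were found for that file.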

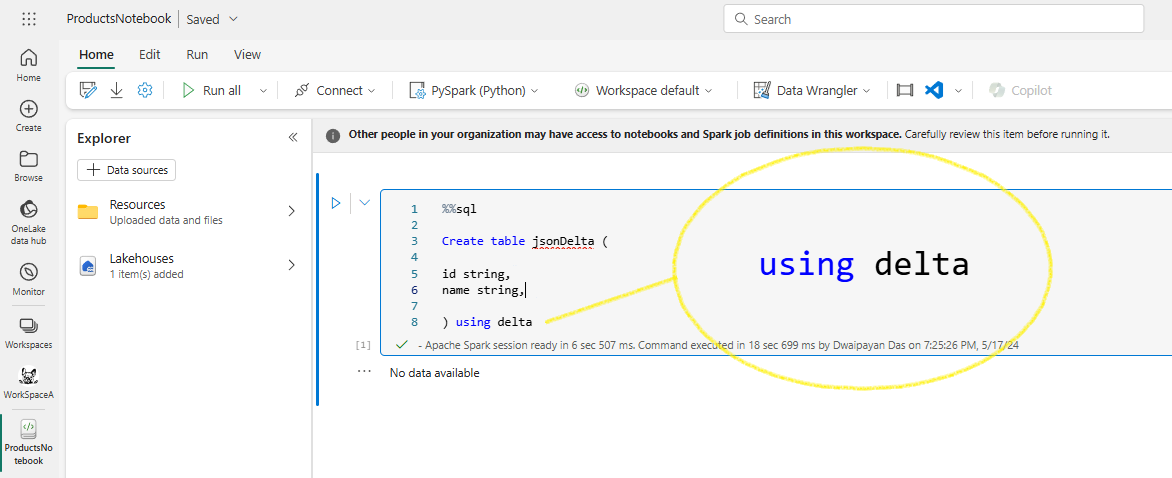

Create a Delta Lake Table to Store the JSON Data

Create a notebook and run the following Spark SQL code:

    %%sql
    CREATE TABLE jsonDelta (
        id string,
        name string,
        email string,
        age int,
        country string
    ) USING DELTA

Remember to include USING DELTA at the end so the table is created in Delta format.

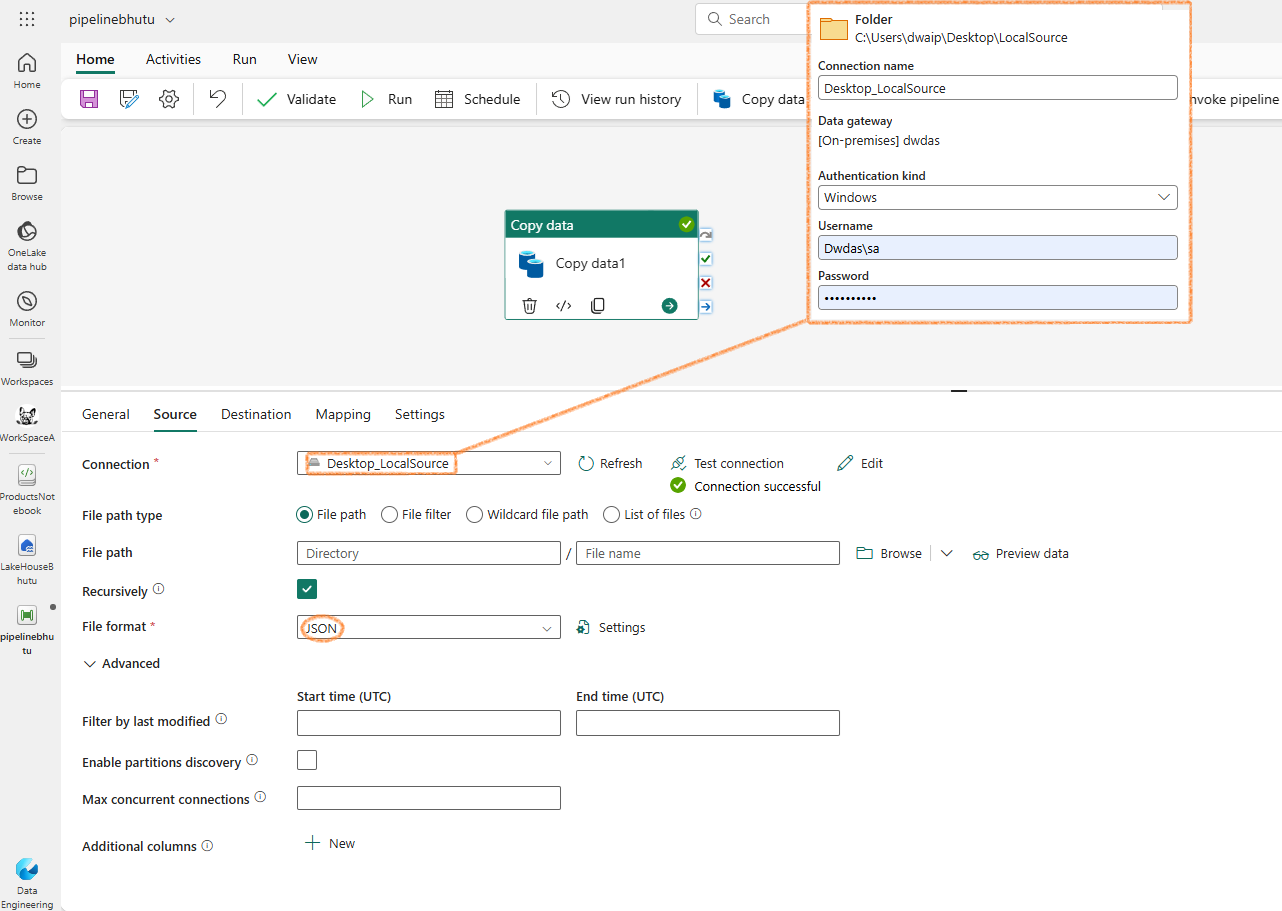

Set Up the Copy Data Process

- Source Setup: Follow the diagram to set up the source. Ensure the admin account has the right permissions for the source folder.

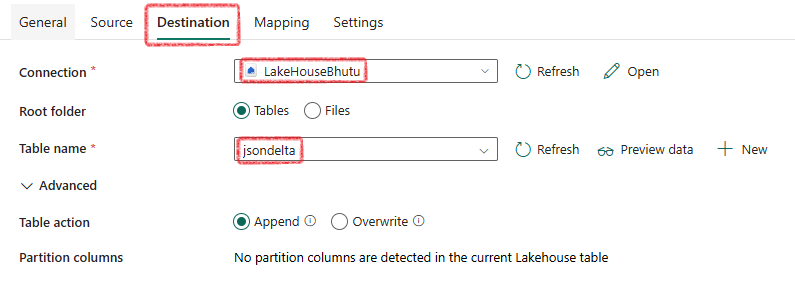

- Destination Setup: Follow the diagram for the destination setup.

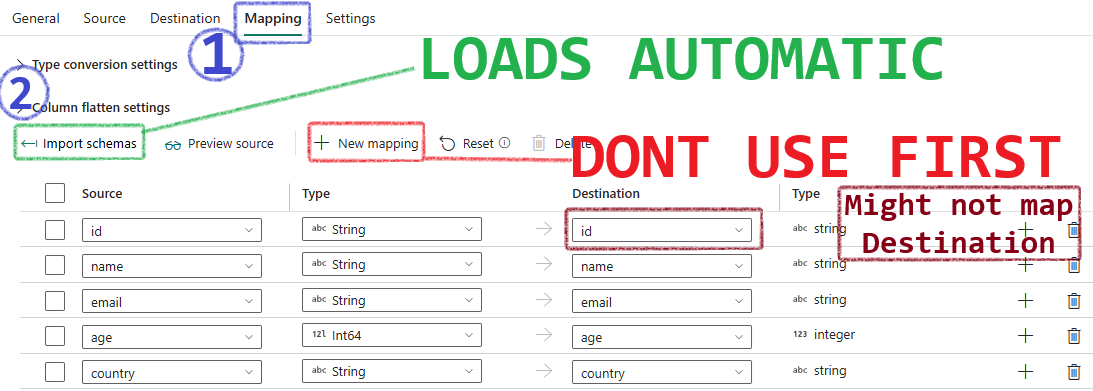

- Mapping Setup: Follow the diagram to set up the mapping.

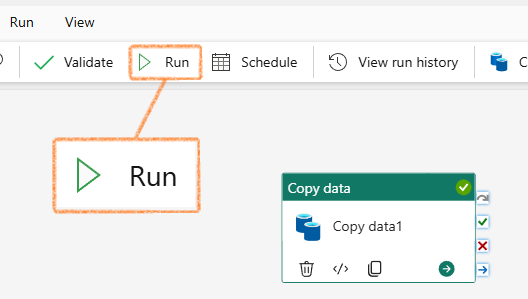
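The mapping step pairs each JSON field with a table column and its type. As a rough illustration of what that means, here is a plain Python sketch. It is not what the Copy activity runs internally, and the one-to-one field names are an assumption based on the table definition above; map_record is a hypothetical helper.

```python
# Column name -> Python type standing in for the Delta column type.
# Assumed one-to-one mapping between JSON fields and the jsonDelta columns.
COLUMN_TYPES = {
    "id": str,
    "name": str,
    "email": str,
    "age": int,
    "country": str,
}


def map_record(record):
    """Convert one parsed JSON object into a row matching the table columns."""
    row = {}
    for column, cast in COLUMN_TYPES.items():
        value = record.get(column)
        # Missing or null fields become None (NULL in the table).
        row[column] = None if value is None else cast(value)
    return row
```

For example, map_record({"id": 7, "age": "42"}) yields an id of "7", an age of 42, and None for the remaining columns.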

Run the Pipeline

Click “Run.” The pipeline will process all the JSON files and add the data to the Delta Lake table. That’s it!

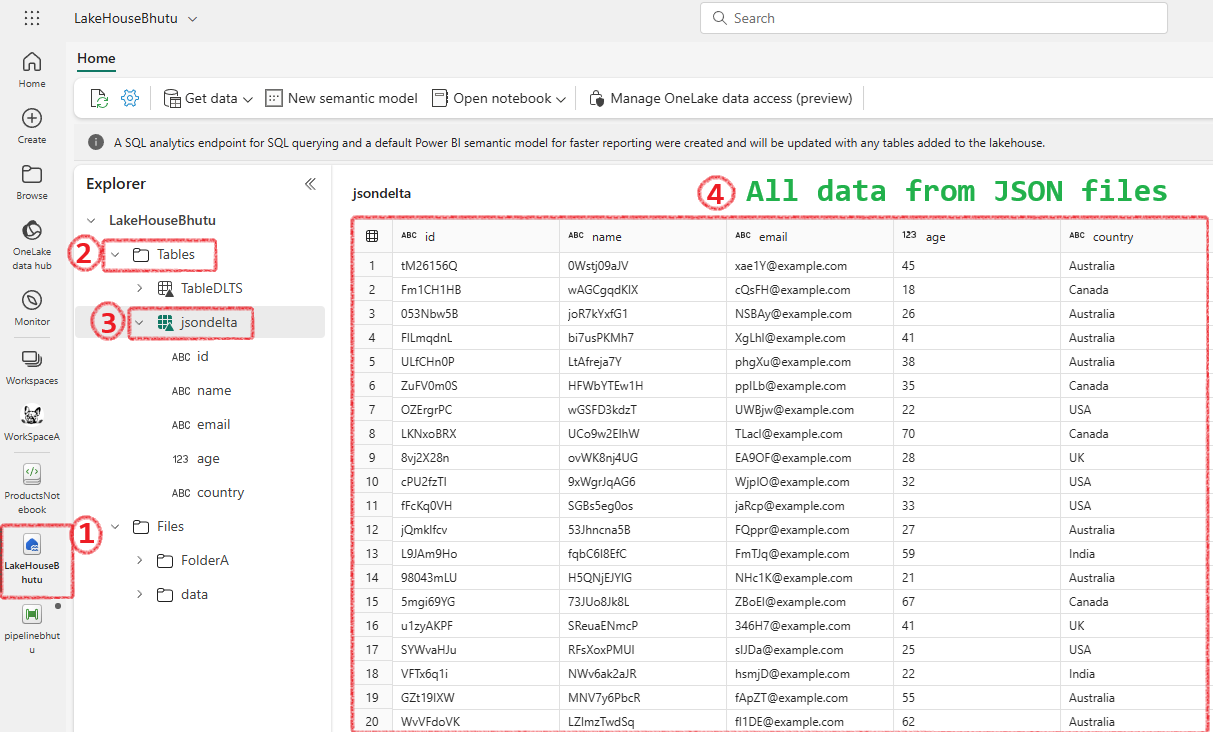

Check the Delta Lake Table Data

Go to the lakehouse, expand the table, and you will see that all the JSON data has been loaded into the Delta Lake table.
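You can also confirm the load from a notebook. The sketch below assumes the notebook's built-in spark session and the jsonDelta table created earlier; the helper name summarize_table is made up for this example.

```python
def summarize_table(spark, table_name="jsonDelta", sample_size=5):
    """Return the row count and a few sample rows from a lakehouse table."""
    count_row = spark.sql(f"SELECT COUNT(*) AS n FROM {table_name}").collect()[0]
    sample = spark.sql(f"SELECT * FROM {table_name} LIMIT {sample_size}").collect()
    return count_row["n"], sample


# In a Fabric notebook, `spark` is already defined, so usage would look like:
# total, rows = summarize_table(spark)
# print(total, rows)
```

If the count matches the number of records across your JSON files, the pipeline copied everything.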

Conclusion

I hope this showed how simple and nearly no-code it is to load data from your local file system into a Delta Lake table in Fabric.