How many different Workflows can you have for Real-Time Data Processing?¶

Real-time data processing can be quite confusing because there are so many tools and services. But let’s make it simple. We’ll look at different ways to handle real-time events, like tweets, and see how they work with open-source tools, Azure, Aws, Google Cloud and Databricks.

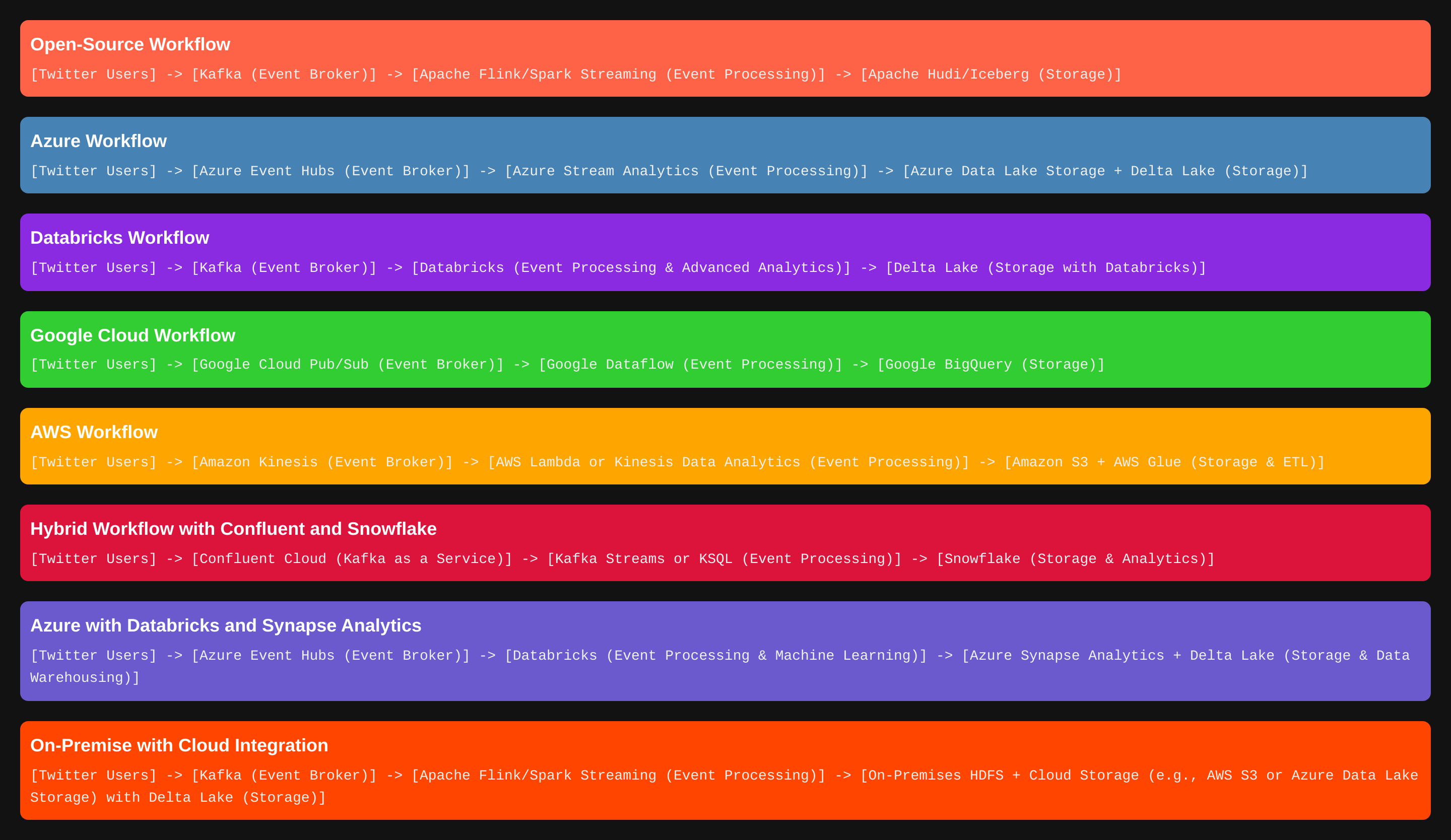

Open-Source Workflow¶

In this setup, everything is open-source, giving you full control over the technology stack.

[Twitter Users] -> [Kafka (Event Broker)] -> [Apache Flink/Spark Streaming (Event Processing)] -> [Apache Hudi/Iceberg (Storage)]

Here’s how it works: - Kafka: Acts as the middleman, receiving and managing events (tweets). - Apache Flink/Spark Streaming: These tools process the data in real-time, filtering or enriching tweets as needed. - Apache Hudi/Iceberg: These storage solutions handle storing the processed data, offering features like time travel and ACID transactions, similar to Delta Lake but in the open-source world.

Azure Workflow¶

This workflow leverages Azure’s managed services for a streamlined, cloud-based solution.

[Twitter Users] -> [Azure Event Hubs (Event Broker)] -> [Azure Stream Analytics (Event Processing)] -> [Azure Data Lake Storage + Delta Lake (Storage)]

Here’s the breakdown: - Azure Event Hubs: This is Azure’s version of Kafka, handling real-time data ingestion. - Azure Stream Analytics: Processes the tweets as they come in, performing tasks like filtering and aggregation. - Azure Data Lake Storage + Delta Lake: Stores the processed data in a scalable and efficient way, allowing for further analysis and querying.

Databricks Workflow¶

This workflow combines the power of Databricks with Delta Lake for advanced analytics and machine learning.

[Twitter Users] -> [Kafka (Event Broker)] -> [Databricks (Event Processing & Advanced Analytics)] -> [Delta Lake (Storage with Databricks)]

Here’s how it functions: - Kafka: As usual, Kafka receives and queues the events. - Databricks: Handles the heavy lifting of real-time processing and advanced analytics, including machine learning if needed. - Delta Lake: Integrated with Databricks, Delta Lake stores the data efficiently, allowing for complex queries and historical data analysis.

Google Cloud Workflow¶

If you’re into Google Cloud, this setup might be right for you.

[Twitter Users] -> [Google Cloud Pub/Sub (Event Broker)] -> [Google Dataflow (Event Processing)] -> [Google BigQuery (Storage)]

AWS Workflow¶

Here’s a combination using Amazon Web Services for a fully managed experience.

[Twitter Users] -> [Amazon Kinesis (Event Broker)] -> [AWS Lambda or Kinesis Data Analytics (Event Processing)] -> [Amazon S3 + AWS Glue (Storage & ETL)]

Hybrid Workflow with Confluent and Snowflake¶

This setup combines a managed Kafka service with Snowflake’s cloud data platform.

[Twitter Users] -> [Confluent Cloud (Kafka as a Service)] -> [Kafka Streams or KSQL (Event Processing)] -> [Snowflake (Storage & Analytics)]

Azure with Databricks and Synapse Analytics¶

This combination leverages Azure’s data services for powerful analytics.

[Twitter Users] -> [Azure Event Hubs (Event Broker)] -> [Databricks (Event Processing & Machine Learning)] -> [Azure Synapse Analytics + Delta Lake (Storage & Data Warehousing)]

On-Premise with Cloud Integration¶

If you’re starting on-premise but want to integrate with the cloud, here’s an option:

- Kafka: Manages your events locally. - Apache Flink/Spark Streaming: Processes the events on-premise. - On-Premises HDFS + Cloud Storage: You can store the data locally on HDFS or integrate it with cloud storage services like S3 or Azure Data Lake Storage, using Delta Lake for additional features like ACID transactions.