dropna & fillna - Handling missing values in DataFrames

In PySpark dataframes, missing values are represented as NULL or None. Here, I will show you how to handle these missing values using various functions in PySpark.

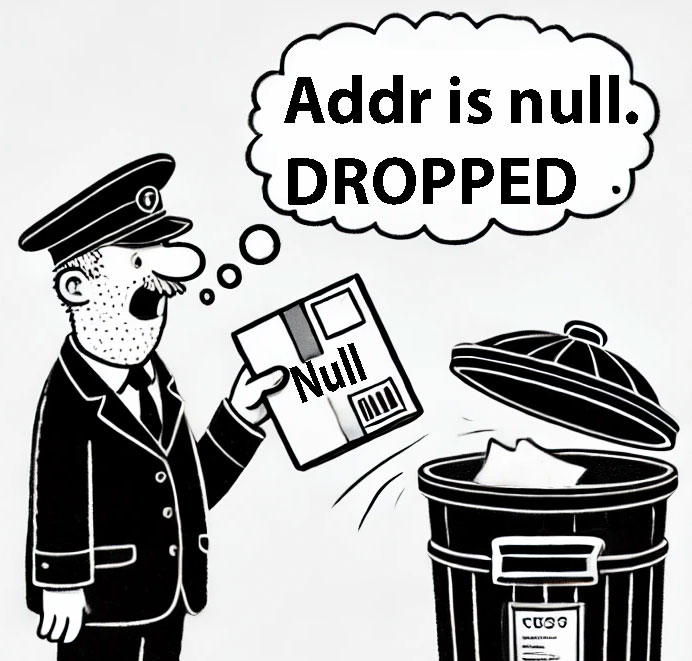

Dropping Rows with Null Values

- Drop Rows with Any Null Values:

This will drop rows that have even one null value.

- Drop Rows Where All Values Are Null:

This will drop rows where all values are null.

- Drop Rows with Null Values in Specific Columns:

Drop rows if country OR region is null:

df = df.dropna(subset=["country", "region"])

# Alternative: df.na.drop(subset=["country", "region"])

Filling Missing Values

- Fill Null Values in Specific Columns:

If the price column has null values, replace them with 0. If the country column has null values, replace them with "unknown".

- Using a Dictionary

Replacing Specific Values

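- Using replace:

This will replace a specific value in the country column with "godknows". Note that, as far as I know, replace only matches concrete values (for example a placeholder string) and does not accept None as the value to look for; for real nulls, use fillna or the when & otherwise approach.

- Using withColumn, when & otherwise: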

This will replace null values in the country column with "godknows".

from pyspark.sql.functions import when

df = df.withColumn("country", when(df["country"].isNull(), "godknows").otherwise(df["country"]))

- Using Filter